Hands-on Practice with the requests Library

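- Scraping a JD.com product page

- Scraping an Amazon product page

- Submitting search keywords to Baidu/360

- Looking up the location of an IP address

- Downloading and saving images from the web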

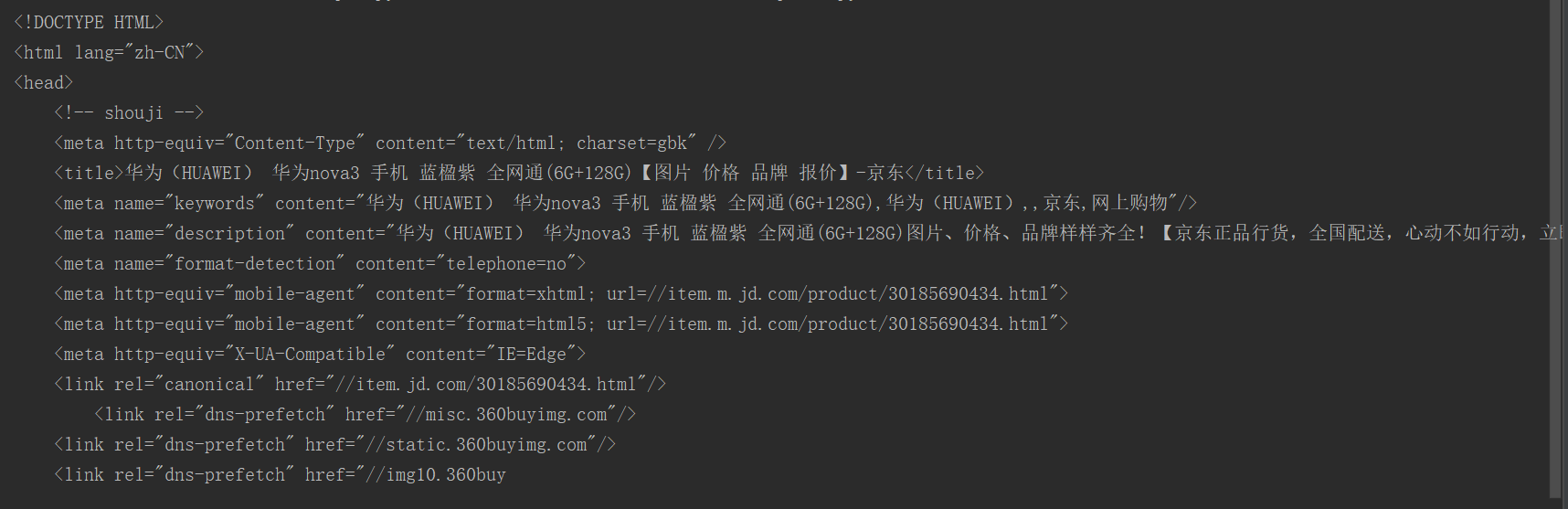

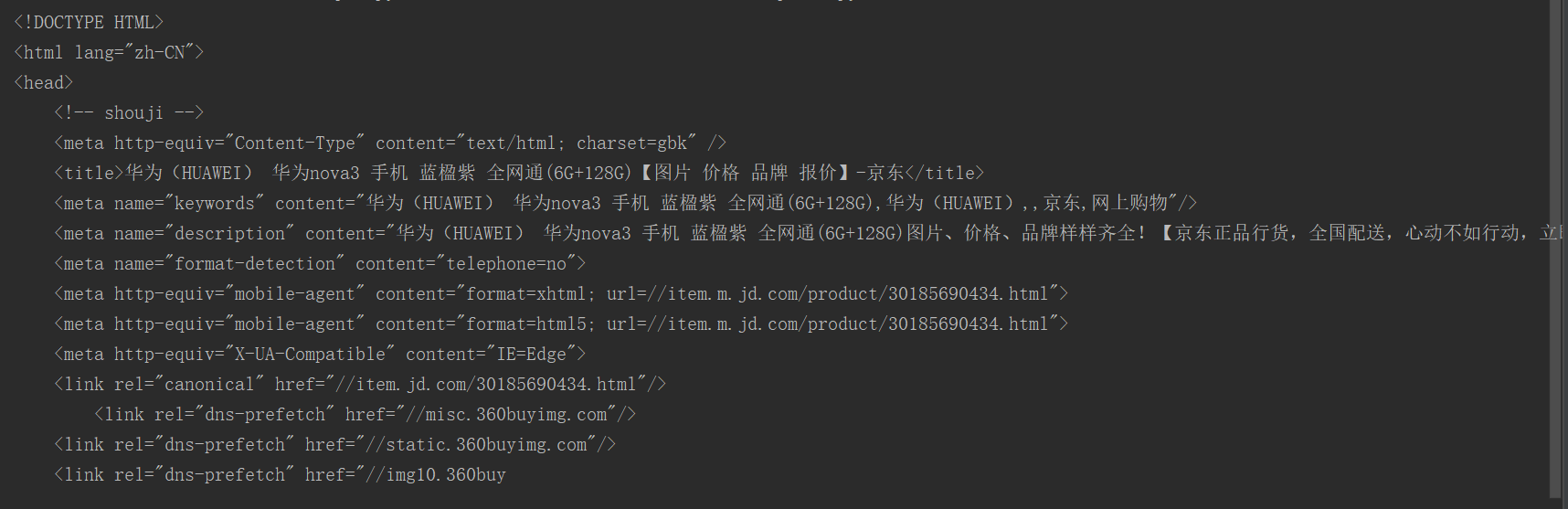

1. Scraping a JD.com product page

Example product: Huawei nova 3

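```python
import requests

def GetHTMLText(url):
    """Fetch a page and return the first 1000 characters of its text."""
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()               # raise HTTPError for 4xx/5xx status codes
        r.encoding = r.apparent_encoding   # guess the encoding from the page content
        return r.text[:1000]
    except requests.RequestException:      # network errors, timeouts, bad status codes
        return "Scraping failed"

if __name__ == '__main__':
    url = "https://item.jd.com/30185690434.html"
    print(GetHTMLText(url))
```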
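`r.raise_for_status()` is what turns an error page into an exception that the `except` branch can catch. To see which status codes trigger it without touching the network, here is a minimal sketch that builds a `requests.Response` by hand (an artificial setup for illustration only; `status_error` is a hypothetical helper name):

```python
import requests

def status_error(status_code, url="https://item.jd.com/30185690434.html"):
    """Return the message raise_for_status() would raise, or None if no error is raised."""
    r = requests.Response()      # empty Response built by hand; no request is sent
    r.status_code = status_code
    r.url = url
    try:
        r.raise_for_status()     # raises only for 4xx and 5xx codes
        return None
    except requests.HTTPError as e:
        return str(e)

for code in (200, 302, 404, 503):
    print(code, status_error(code))
```

Only 4xx and 5xx codes raise. A 3xx code does not, and in practice `requests.get` follows redirects itself, so you normally see the final page's status.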

2. Scraping an Amazon product page

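Some websites deploy anti-scraping mechanisms. Common strategies include:

- Checking request headers (for example, rejecting clients whose User-Agent does not look like a browser)

- Analyzing user behavior (for example, too many requests from one IP in a short time)

- Rendering pages dynamically (content loaded by JavaScript is missing from the raw HTML)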
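Behavior-based checks often look at request frequency. One simple mitigation, sketched here under the assumption that a fixed minimum delay between requests is acceptable to the target site, is to throttle your own requests. `Throttle` is a hypothetical helper, not part of requests:

```python
import time

class Throttle:
    """Hypothetical helper: enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between two requests
        self._last = None                 # time of the previous request, if any

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)     # sleep only for the part of the interval left
        self._last = time.monotonic()

# Usage: create one Throttle(2.0) and call throttle.wait() right before each requests.get(...)
```

This only spaces out requests; it does nothing against header checks or JavaScript-rendered pages.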

Reference code, which sends a browser-like `user-agent` header:

```python
import requests

def GetHTMLText(url):
    try:
        # Identify as a browser; the default "python-requests/..." User-Agent may be rejected
        headers = {"user-agent": "Mozilla/5.0"}
        r = requests.get(url, headers=headers, timeout=30)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r.text[:1000]
    except requests.RequestException:
        return "Scraping failed"

if __name__ == '__main__':
    url = "https://www.amazon.cn/gp/product/B01M8L5Z3Y"
    print(GetHTMLText(url))
```
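Why the custom header matters: by default requests identifies itself as `python-requests/<version>`, which a header-checking server can spot immediately. A small sketch that prepares (but never sends) a request and reports the User-Agent it would carry; `user_agent_sent` is a hypothetical helper name:

```python
import requests

def user_agent_sent(headers=None):
    """Return the User-Agent a GET request would carry, without sending it."""
    with requests.Session() as s:
        req = requests.Request("GET", "https://www.amazon.cn/gp/product/B01M8L5Z3Y", headers=headers)
        prepared = s.prepare_request(req)  # merges the session's default headers with ours
        return prepared.headers["User-Agent"]

print(user_agent_sent())                               # e.g. python-requests/2.x.y
print(user_agent_sent({"user-agent": "Mozilla/5.0"}))  # Mozilla/5.0
```

Header names are case-insensitive in requests, so the lowercase `user-agent` key overrides the default `User-Agent`.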

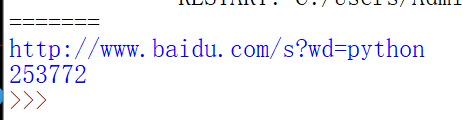

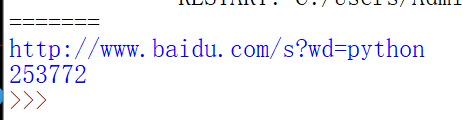

3. Submitting search keywords to Baidu/360

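Pass the keyword through the `params` argument, which requests appends to the URL as a query string. Baidu's keyword interface is `http://www.baidu.com/s?wd=keyword`; 360's is `http://www.so.com/s?q=keyword`.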

```python
import requests

def Get(url):
    headers = {'user-agent': 'Mozilla/5.0'}
    key_word = {'wd': 'python'}          # Baidu's keyword parameter is "wd"
    try:
        r = requests.get(url, headers=headers, params=key_word, timeout=30)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.request.url)             # the URL actually requested, with the query string
        return r.text
    except requests.RequestException:
        return "Scraping failed"

if __name__ == '__main__':
    url = "http://www.baidu.com/s"
    print(len(Get(url)))                 # length of the returned page text
```
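To check what URL `params` produces for either engine without sending anything, a sketch using `requests.Request(...).prepare()`; `search_url` and its engine labels are hypothetical names chosen for this example:

```python
import requests

def search_url(engine, keyword):
    """Build the search URL for a keyword without sending the request."""
    # Keyword interfaces: Baidu uses "wd", 360 (so.com) uses "q"
    endpoints = {
        "baidu": ("http://www.baidu.com/s", "wd"),
        "360": ("http://www.so.com/s", "q"),
    }
    base, key = endpoints[engine]
    prepared = requests.Request("GET", base, params={key: keyword}).prepare()
    return prepared.url

print(search_url("baidu", "python"))   # http://www.baidu.com/s?wd=python
print(search_url("360", "python"))     # http://www.so.com/s?q=python
```

Non-ASCII keywords such as Chinese are percent-encoded as UTF-8 automatically, so there is no need to encode them by hand.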

4. Looking up the location of an IP address

Use IP138's query interface, where `ipaddress` is the IP to look up:

`http://m.ip138.com/ip.asp?ip=ipaddress`

```python
import requests

url = "http://m.ip138.com/ip.asp?ip="
ip = input().strip()            # input() already returns a str
try:
    r = requests.get(url + ip, timeout=30)
    r.raise_for_status()
    print(r.status_code)
    print(r.text[-500:])        # print the last 500 characters of the page
except requests.RequestException:
    print("failed")
```
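String concatenation sends whatever the user typed. A sketch that validates the input with the standard `ipaddress` module first and lets `params` build the query string; `ip138_query_url` is a hypothetical helper name:

```python
import ipaddress
import requests

def ip138_query_url(ip):
    """Validate an IP string and build the IP138 query URL (no request is sent)."""
    ipaddress.ip_address(ip)    # raises ValueError for malformed input
    prepared = requests.Request("GET", "http://m.ip138.com/ip.asp", params={"ip": ip}).prepare()
    return prepared.url

print(ip138_query_url("202.204.80.112"))  # http://m.ip138.com/ip.asp?ip=202.204.80.112
```

The returned URL can then be passed to `requests.get` exactly as in the code above.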

5. Downloading and saving an image from the web

```python
import os
import requests

url = "http://n.sinaimg.cn/sinacn12/w495h787/20180315/1923-fyscsmv9949374.jpg"
root = "C:/Users/Administrator/Desktop/spider/first week/imgs/"
path = root + url.split('/')[-1]       # name the file after the last URL segment

try:
    if not os.path.exists(root):
        os.makedirs(root)              # also creates missing parent directories
    if not os.path.exists(path):
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        with open(path, 'wb') as f:    # binary mode: r.content is bytes
            f.write(r.content)         # the with block closes the file automatically
        print("save successfully!")
    else:
        print("file already exists!")
except (requests.RequestException, OSError):
    print("spider fail")
```
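Two refinements worth considering, sketched under stated assumptions: `url.split('/')[-1]` keeps any `?query` part in the file name, and `r.content` loads the whole file into memory. The helpers `local_path` and `save_image` below are hypothetical names; `save_image` streams the body in chunks with `stream=True` and `iter_content`:

```python
import os
from urllib.parse import urlparse

import requests

def local_path(url, root):
    """Derive a local file path from the URL path, ignoring any ?query or #fragment."""
    name = os.path.basename(urlparse(url).path)
    return os.path.join(root, name)

def save_image(url, root):
    """Download url into root in chunks; skip the download if the file already exists."""
    path = local_path(url, root)
    if os.path.exists(path):
        return path
    os.makedirs(root, exist_ok=True)
    with requests.get(url, stream=True, timeout=30) as r:
        r.raise_for_status()
        with open(path, "wb") as f:
            for chunk in r.iter_content(chunk_size=8192):  # 8 KB at a time
                f.write(chunk)
    return path
```

For a single small image the original version is perfectly adequate; streaming mainly pays off for large files.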